Introduction

Client and server are directly connected through a CAT6 cable on a dedicated gigabit interface.

The goal is to measure how many packets per second the server can handle while minimising "external" factors. Therefore, no switch or other hardware is placed between the sender and the receiver.

Details of the test

- Sender sends queries from a pcap file using tcpreplay at various speeds

- Queries are mostly A queries for www.*.eu names (most likely answered with NXDOMAIN or an NS delegation)

- No DNSSEC
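The following minimal Python sketch shows the wire format of one such query. It builds a DNS header and a single question for an A record, following RFC 1035. The name `www.example.eu`, the query ID and the cleared RD flag are illustrative assumptions and do not come from the test pcap. The query carries no EDNS0 OPT record (ARCOUNT = 0), so the DO bit is never set, which matches the "No DNSSEC" condition.

```python
import struct


def encode_qname(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels (RFC 1035, section 3.1)."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"invalid label length in {name!r}")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"  # the zero-length root label terminates the name


def build_a_query(name: str, query_id: int) -> bytes:
    """Build a plain DNS A query: one question, no EDNS0 OPT record."""
    # Assumption: all flags cleared (QR=0 query, opcode QUERY, RD=0).
    # Real captured queries may well have RD set.
    flags = 0x0000
    # Header: ID, flags, QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0 (no OPT, so no DO bit)
    header = struct.pack("!HHHHHH", query_id, flags, 1, 0, 0, 0)
    # Question: QNAME, then QTYPE=A (1) and QCLASS=IN (1)
    question = encode_qname(name) + struct.pack("!HH", 1, 1)
    return header + question


if __name__ == "__main__":
    pkt = build_a_query("www.example.eu", 0x1234)  # hypothetical name and ID
    print(len(pkt))  # 32
```

Each pcap record would carry a payload like this inside a UDP datagram to port 53. The Ethernet, IP and UDP headers are not built in this sketch.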

Machine specifications

Sender

- Server: HP ProLiant DL160 G5

- CPU: 2x Quad core (Intel(R) Xeon(R) CPU E5405 @ 2.00GHz)

- Memory: 4x 4GiB DDR2 FB-DIMM, synchronous, 667 MHz (1.5 ns)

- NIC: Broadcom Corporation NetXtreme BCM5722 Gigabit Ethernet PCI Express

- OS: Ubuntu 12.04 kernel 3.2.0 (minimal server install)

Receiver

- Server: Supermicro X8DTU

- CPU: 2x Quad core HT enabled (Intel(R) Xeon(R) CPU L5630 @ 2.13GHz)

- Memory: 6x 8GiB DDR3-1333 DIMM (1066 MHz, 0.9 ns)

- NIC: Intel Corporation 82576 Gigabit Network Connection

- OS: Ubuntu 12.04 minimal server install or FreeBSD 8.2

Procedure

A single pcap file with 6 000 000 UDP DNS query packets was created. We used tcpreplay on the client to send a given number of packets to the server at a predefined rate. The reply rate was calculated by multiplying the reply ratio (answers received / queries sent) by the send rate. For example, a send rate of 100 Kqps (100 000 queries per second) with a reply ratio of 80% results in 80 Kaps (80 000 answers per second).
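The following small Python sketch writes out the reply-rate arithmetic above. The function names are hypothetical, and the answer count in the example was chosen only to reproduce the 80% ratio from the text. It is not a measured result. As a side calculation, the sketch also shows how long a replay of a given number of packets takes at a fixed rate.

```python
def reply_rate(send_rate_qps: float, queries_sent: int, answers_received: int) -> float:
    """Reply rate (answers/s) = reply ratio (answers received / queries sent) x send rate."""
    if queries_sent <= 0:
        raise ValueError("queries_sent must be positive")
    reply_ratio = answers_received / queries_sent
    return reply_ratio * send_rate_qps


def replay_duration_s(packet_count: int, send_rate_qps: float) -> float:
    """Seconds needed to send packet_count packets at a constant send rate."""
    return packet_count / send_rate_qps


if __name__ == "__main__":
    # Example from the text: 100 Kqps sent, 80% of the queries answered.
    print(reply_rate(100_000, 6_000_000, 4_800_000))  # 80000.0
    # Replaying all 6 000 000 packets at 100 Kqps takes one minute.
    print(replay_duration_s(6_000_000, 100_000))  # 60.0
```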

The candidates

Here you can find the results of the benchmark tests of four different DNS implementations:

- YADIFA 1.0.0

- NSD 3.2.10

- Knot 1.0.5

- BIND 9.9.1

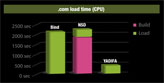

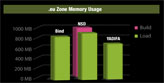

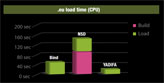

These four bar charts show the zone load (CPU) time and the zone memory usage for YADIFA, BIND and NSD.

The CPU load time graphs show the CPU time needed to load the .eu and .com zones. Memory usage is the amount of RAM used once the zone has been fully loaded. The build time is the time NSD needs to compile the zone file (NSD 3 compiles zone files into a database with zonec before serving them), so it applies to NSD only.